- Home

- Sorting algorithms

- Heap sort

Heap sort

Background

Heap sort is a very fast sorting algorithm. It runs in O(n*log n) time and requires only constant additional space O(1). It is kind of evolved selection sort, which uses a complete binary tree structure. This reduces the time to find the max element and thus makes the routine very efficient. The algorithm is unstable, because its structure could change the order of elements with equal keys. One downside is that this sorting is not adaptive to nearly sorted input.

Aside from being very fast, heap sort has another major advantage. Its execution time is very predictive, because its best, average and worst cases all complete in O(n*log n) time.

Unlike bubble, selection and insertion sorts, explaining this method requires some preparation. For that reason we begin with explaining the basics

Heap

Heap sort uses a min or max heap to optimize the finding of the min/max element. Heap or also binary heap is a special type of binary tree. A given tree could be a heap only if:

- is complete (has at most one incomplete level of nodes and that is its deepest level)

- the incomplete level is filled left to right

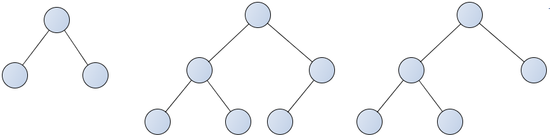

Here are 3 correct examples of heaps:

The first example has three elements, located on two levels. This is a complete heap – all levels are filled to their capacity.

The second and third examples are 3-level heaps. Their leaves(3rd level) are incomplete, but they are filled left to right, so they are still correct heaps.

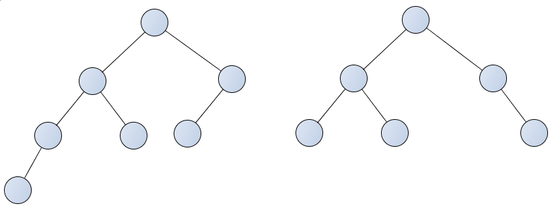

Here are two examples of trees that violate the heap rules(properties):

The graph on the left has incomplete levels 3 and 4. This violates the first property of the heap.

The right example has a “hole” in its 3rd level. This violates the second property.

Terms

- Node – this is a synonym to element. We represent them with the circles in the graphics.

- Root – the first node, located above all other nodes. The root is the only element in the first level.

- Leaf – leaf is any node of the last level.

- Parent-Child – This relation is valid for any pair of directly connected nodes. The node, which is closer to the root is the parent and the other is the child. For example, the root is the parent of the two nodes of level 2.

- Height – the number of levels in the tree. Also defined as the shortest path from the root to any leaf.

Max and Min Heap

The point of using heaps is to make some operations very quick. Such operations could be searching for an element with certain value, finding the maximum value and others. For that purpose we need to arrange the data in a specific way.

In a max heap each node is greater than or equal to its children and smaller than or equal to its parent. Parent >= Child

Min heap is exactly the opposite. Parent <= Child.

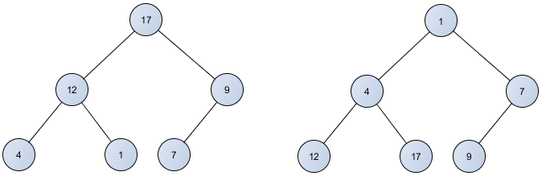

Here are examples of max and min heap:

Earlier we said that heap sort is kind of “evolved selection sort”. Remember how the selection sort works? It finds the smallest element and puts it in its correct place. Then it repeats this until all elements are in sorted. Finding the smallest element was a task with linear complexity O(n), because each time we were comparing the minimum value with each element. Repeating this for n elements resulted in O(n2) complexity.

Obviously, the biggest element in a max heap is always the root. So, if we have a max heap, we can find the max element in a constant time. You will see that extracting the maximum element and maintaining the max heap structure takes logarithmic time. Repeating this n times will allow us to sort an array in O(n * log n).

The array as a heap

Usually the data that needs to be sorted is located in an array.

There is a smart way to work with the indices, which allows to simulate

the tree structure for the heap sort:

- the root is always the first element from the array (index = 0)

- If the current index is “i”:

- the index of the left child is: i * 2 + 1

- the index of the right child is: i * 2 + 2

- the parent index is: (i – 1) / 2

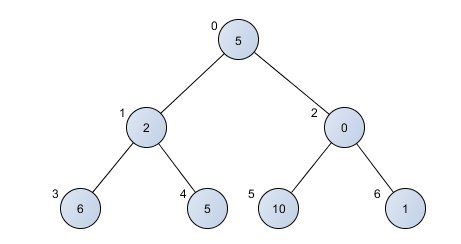

For example, let's map one array to a heap:

5, 2, 0, 6, 5, 10, 1

The root is the element with index 0.

It has the value 5.

Its left child is calculated: 0*2 + 1 = 1. So, this

is the element with index 1 (value 2).

The right child of the root has

index 0*2 + 2= 2, the element with value 0.

Now we have completed the

first two levels.

To map the third level, we need to find the

children of the nodes of level 2.

We take the left node and find its

children: 1*2 + 1 = 3, 1*2 + 2 = 4.

And then the children of the right

node from level 2: 2*2 + 1 = 5, 2*2 + 2 = 6.

Left-to-right the nodes on

level 3 have indices 3, 4, 5, 6 with values 6, 5, 10, 1.

Now we have the heap representation of the array. What kind of heap is that? Min?Max?

This is just a random heap, neither min nor max.

Heapify

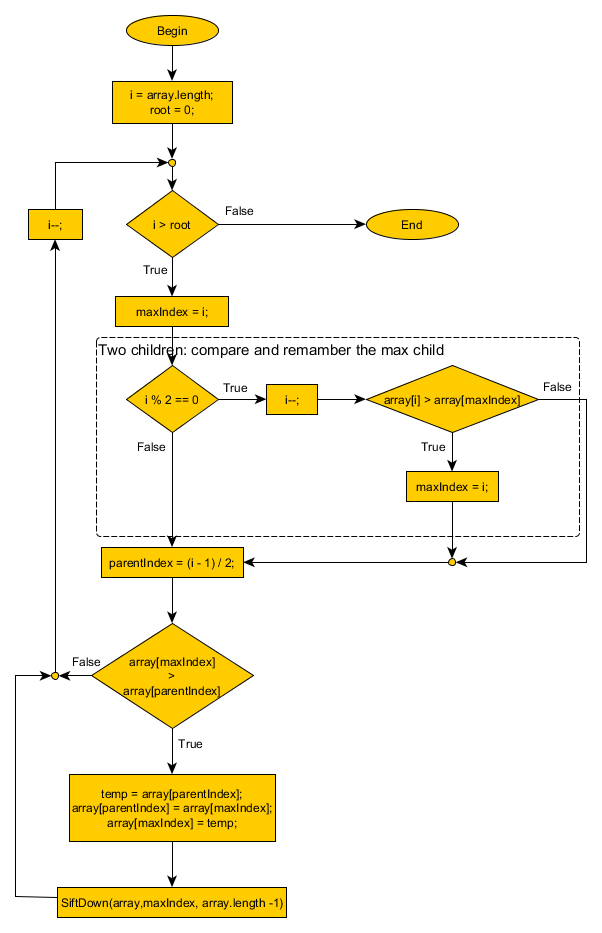

Heap sort uses a min or a max heap. You just saw that the heap representation of a random array is not a min or max heap. Therefore we need to rearrange the elements in the array, so its tree representation is a max heap. This procedure is called “heapify”. Let's look at the implementation of this routine:

We start with the last leaf and go upwards until we reach the root.

- Our first step is to compare the current node to its "brother" and remember which one is bigger.

- Then we compare the bigger child with the parent. If the child is bigger we swap their places. This orders these three elements according to the max heap rules.

- If there was a swap in step 2. we apply a function called "sift down" to the ex-parent, which went a level down. We will look more in the sift down algorithm in a moment.

- We go to the next pair of children and continue with 1-2-3-4 until we reach the root element.

This function runs in O(n*log n) time. It makes linear number of steps and each step takes logarithmic time, because of the Sift down procedure.

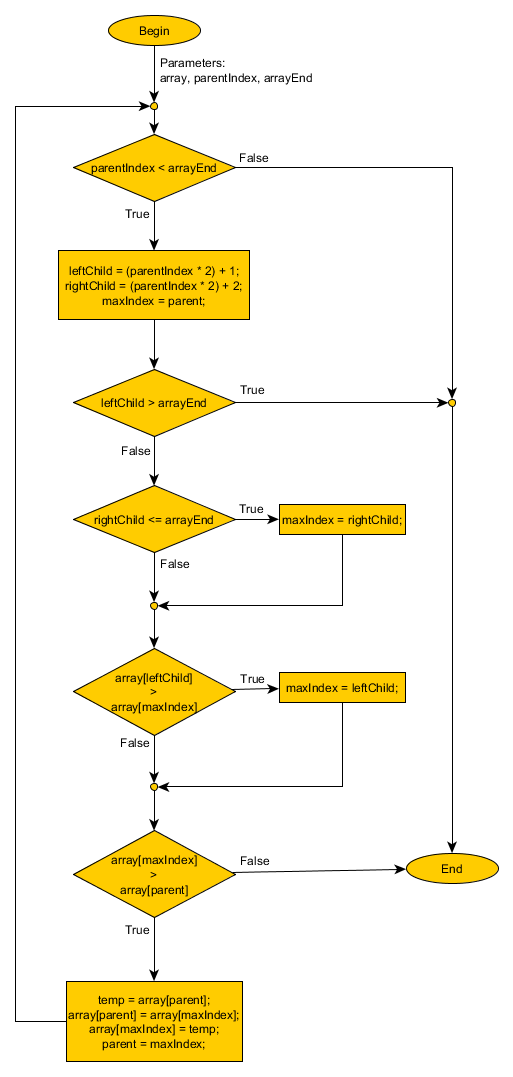

Sift down

"Sift down" is a routine that sinks the current element to its correct place. This is used when we arrange our array to a correct max heap. In "heapify", when we find that a child is bigger than its parent we swap their places. In this situation it is likely that the smaller element needs to go down even further and that is exactly what "sift down" does.

- We check if the current element has at least one child. If there are no children, the element is a leaf, it can't sink further and we are done.

- We compare the children of the current element and remember which one is the bigger one.

- We compare the bigger child to the parent.

- If the parent is bigger then it doesn't need to sink further and we are done. Otherwise we make a swap and repeat the procedure with the same element, but one level lower.

This algorithm makes at most O(log n) number of steps, because it goes only over one branch of the tree. The max depth that it could go is the number of level in the tree and that is O(log n) complexity.

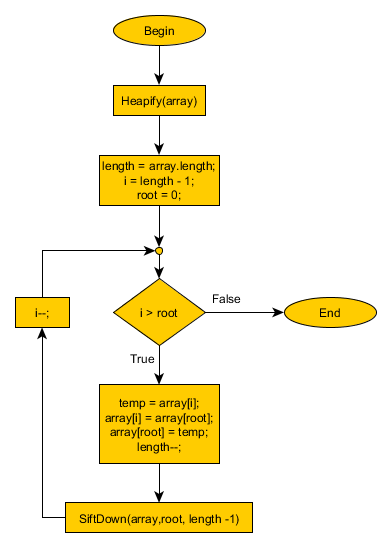

Heap Sort

Now that our array is a correct max heap we can start the sorting routine. We are going to repeat 2 for each element in the array:

- Extract the max element from the heap. Since we have a max heap this means we always take the first element(the root). By "extract" we mean, swapping the first and the last element of the array and marking that we don't need to sort the last element anymore, since it is in its correct place.

- Sink the root element to its correct place, so our array will be a correct max heap again. Note that extracted elements will not be moved. Continue with step 1 until we extract all elements.

Heap sort runs in O(n*log n) time. It takes n-number of steps and each step has the complexity of extracting the root + the sift down. O(1) + O(log n).

At this point many of you will just discard this sorting as too complex. But guess what! Although the explanation is rather long, the typical implementation is about 60 lines of code.

Summary

Heap sort:

- is guaranteed to run in O(n * log n) time

- is unstable

- is not adaptive

- has constant space complexity O(1)

- is used in practice to complement algorithms like quicksort

- Home

- Sorting algorithms

- Heap sort

Manage the football team of your city and make them champions!

Manage the football team of your city and make them champions!

Manage the football team of your city and make them champions!

Manage the football team of your city and make them champions!

Manage the football team of your city and make them champions!

Manage the football team of your city and make them champions!