- Home

- Sorting algorithms

- Selection Sort

Selection sort

Background

Selection sort is an unstable algorithm. It has a best, average and worst case of O(n2). It is too slow for sorting big arrays. The algorithm has one major advantage – it makes only “n” number of swaps, where “n” is the number of elements to be sorted. It makes it useful when the swap is very expensive operation(for instance if the elements are large objects and their keys are small, or when using flash memory).

It also has acceptable performance on small arrays ( 0 < n < 10 to 20). Because of this, the algorithm could be used to sort the final steps in O(n*log2n) algorithms like quicksort, but most of the time insertion is used instead.

Algorithm

Selection sort makes n-1 number of passes through the array. In the first pass it finds the smallest element and puts it in first position. The second pass will skip the first element and find the next smallest item and put it in second position. This repeats until n-1 smallest elements are found and swapped to their places. The nth element is the last and it is already in place.

Before we look at the implementation of the algorithm, let's do one example.

Example

Sort the numbers (7, 9, 0, -3, 10) using selection sort. In this example the underlined number marks the position that is currently sorted i.e. the first position of the unsorted part. The bold numbers are the elements that have just been swapped in the last pass.

Pass 1: 7,

9, 0, -3, 10

1. min = 7

2. 9 < min? No.

3. 0 < min?

Yes => min = 0

4. -3 < min? Yes => min = -3

5. 10 <

min? No

6. Swap 7 and -3

Pass 2: -3,

9, 0, 7, 10

1. min = 9

2. 0 < min? Yes => min = 0

3. 7 < min? No

4. 10 < min? No

5. Swap 9 and 0

Pass 3: -3,

0, 9, 7, 10

1. min = 9

2. 7 < min? Yes => min = 7

3. 10 < min? No

4. Swap 9 and 7

Pass 4: -3,

0, 7, 9, 10

1. min = 9

2. 10 < 9? No

3. Swap 9 and 9

Did you notice? This algorithm can't distinguish between sorted and unsorted array. That is why it needed to do pass 4, despite the fact that it was already sorted. That is why the selection sort doesn't care what the input is – it will always perform the same steps. This is the reason its time complexity is always O(n2).

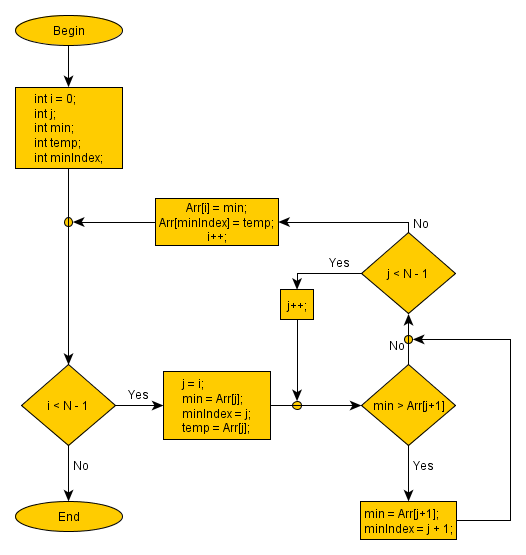

Selection Sort flowchart

Each step of the algorithm sorts one element. After 1 step there will be 1 sorted element and n-1 unsorted elements; after 2 steps - 2 sorted and n-2 unsorted and so on. This helps us think of the array as if there are two separate arrays – a sorted and an unsorted. Initially the sorted array has 0 elements and the unsorted – n elements.

To implement the selection sort we need two loops. The first loop will move the marker of the sorted part. The second loop will iterate through the unsorted part and find the next smallest element inside. In other words we need to nest the second loop in the first. Here is a flow chart to visualize the routine:

Stable implementation

Normally, the algorithm is not stable. It could be implemented to be stable, if necessary. For that purpose insertion is used instead of swaps. This way the relative order of the elements with equal keys will be the same after the sorting.

Note that insertion is inefficient operation, when performed on arrays. This could make the generally inefficient selection sort, extremely inefficient. The insertion is efficient and preferred operation when applied on data structure like linked lists.

In general, if you need a simple and stable sorting algorithm – use insertion sort.

Bidirectional

One optimization that can be done to the algorithm is to make it bidirectional. Each pass will find both the min and the max element. This will not save us any comparisons or swaps, but it will complete in less loop iterations. This means that the loop overhead will be halved.

Sometimes this variation is referred to as “shaker” or “cocktail” sort, but usually behind this name is a variation of the bubble sort.

Bingo sort

Another optimization is the bingo sort algorithm. It takes advantage of the fact that there could be more than one element with the same minimum value(key). After the minimum value is found, the bingo will loop through the array to find all elements with that value and move them in one pass.

On average this improvement does not change the O(n2) complexity. More precisely it has a best, average and worst complexity of O(n*m), where “m” is the number of unique values in the array. We should point out that its best case results in linear O(n) complexity, when the number of unique values is very small.

As we already noted the selection sort is not efficient on large number of items and its usage is limited to small arrays or lists. At the same time, small arrays are unlikely to have many duplicate values and the advantage of the “bingo” optimization is gone.

For this reason, its practical application is limited for some specific problems, where the array contains many duplicate values. For instance, if the range the of possible values saved in the array is small, compared to the number of elements.

Heap sort / Heapsort

The heap is probably the best optimization to the selection sort. In fact, it is so efficient, that we will discuss it in a separate article. In a nutshell, the idea is to arrange the elements in such a way, that finding the maximum / minimum element turns from linear to logarithmic complexity. As a result the complexity of the algorithm drops from O(n2) to O(n*log2n), which is close to the theoretical best that could be achieved.

Summary

Selection sort:

- is easy to implement.

- has constant space complexity O(1).

- makes only “n” number of swaps, which makes it useful in certain situations.

- could be efficient for small arrays 0 < n < 10 to 20.

- makes n2 number of comparisons, which makes it too slow for big arrays.

- can't recognize a sorted array and will always do all passes, even if the input is already sorted.

- is a base for the famous heap sort.

- Home

- Sorting algorithms

- Selection Sort

Manage the football team of your city and make them champions!

Manage the football team of your city and make them champions!

Manage the football team of your city and make them champions!

Manage the football team of your city and make them champions!

Manage the football team of your city and make them champions!

Manage the football team of your city and make them champions!